Rust vs Go: Memory Management

Let’s look at how two popular programming languages, Rust and Go, manage memory.

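When a program starts, the operating system creates a process with its own address space, and the process’s threads run on CPU cores. Code running on the processor works with virtual memory, an abstraction managed by the operating system that maps virtual addresses to physical memory page by page.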

For example, in Go, we can create a slice:

```go
arr := make([]byte, 100)
```

Simplifying somewhat (a small allocation like this one is usually served from memory the runtime already holds, or may even be placed on the stack), the runtime requests a range of virtual addresses from the operating system, but physical memory is not allocated immediately; it is assigned only when the data is accessed for the first time:

```go
first := arr[0]
```

When the first element is accessed, a page fault occurs, and the operating system allocates a physical page, usually 4 KB in size, and maps it to the corresponding virtual address range.

Stack and heap

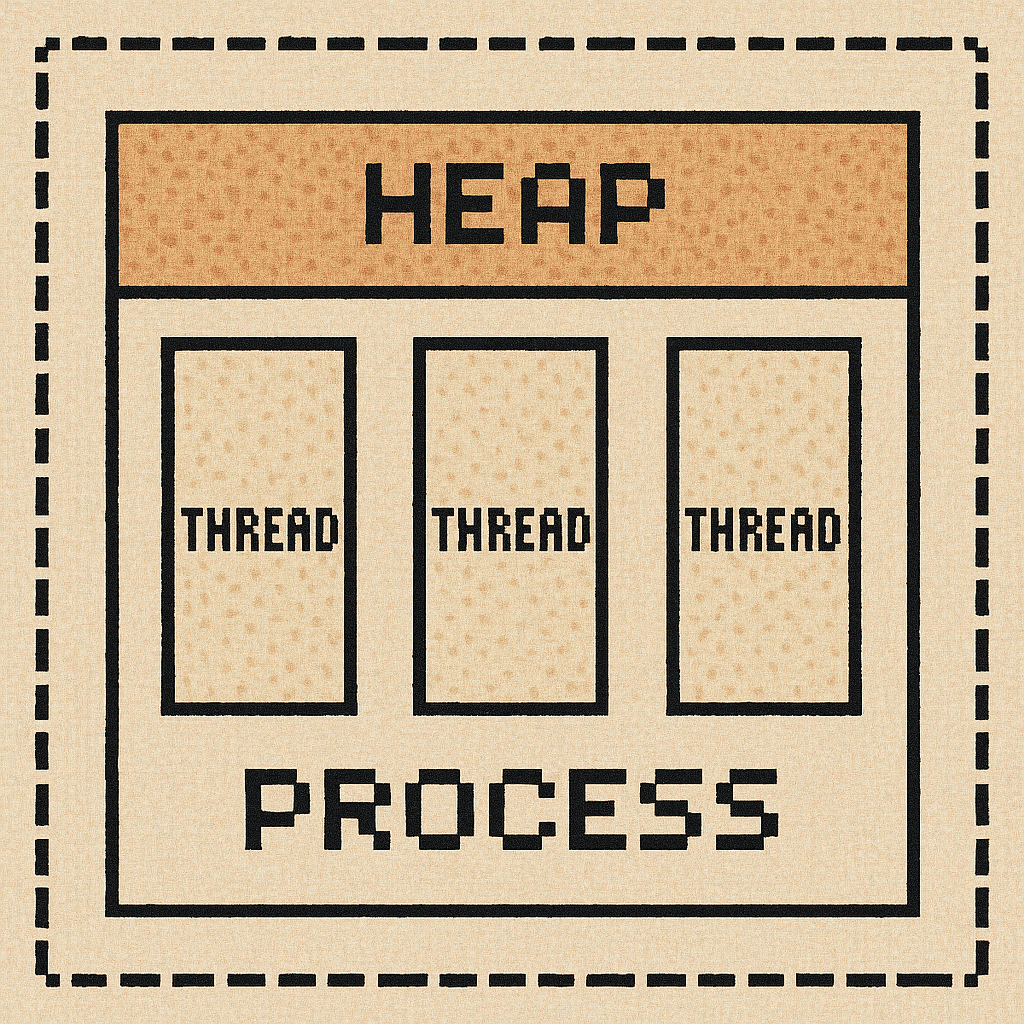

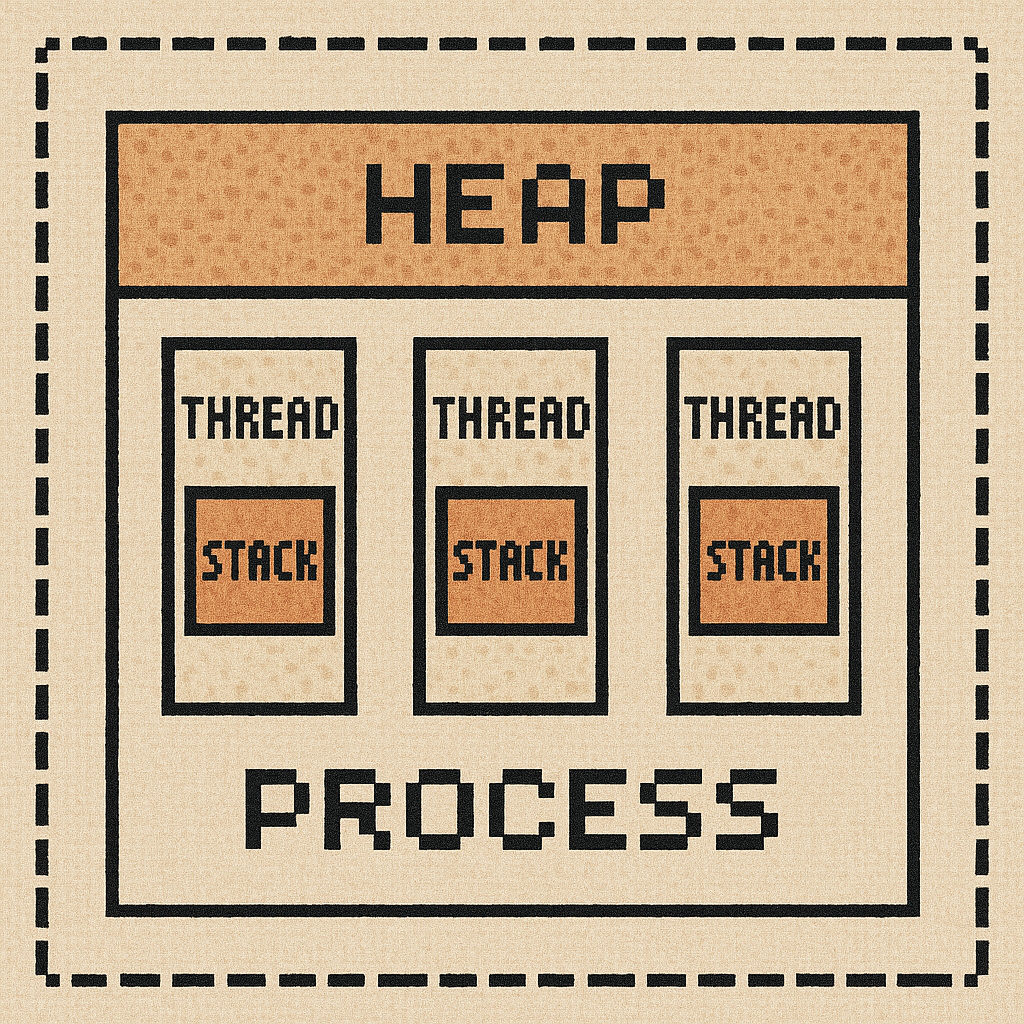

Each process has a shared memory range accessible to all threads, called the heap.

Any thread can work with this memory region, and it can grow dynamically while the program is running.

At the same time, each thread has its own memory region, accessible only to that thread, called the stack.

The stack stores:

- local variables (not just primitive types: any value of known size that does not need to outlive the function call)

- function arguments

- return addresses (where execution should continue once the function finishes)

All this data exists only until the function returns. Then its stack frame is released, and the space is simply reused by later calls; nothing has to be freed individually.

How does Go decide where to allocate data?

In Go, the decision is made by the escape analyzer, a compiler phase that determines whether each value can stay on the stack or must be placed on the heap.

For example, for this code:

```go
func add(a, b int) int {
	c := a + b
	return c
}
```

The escape analyzer sees that the variable c does not outlive the function: `return c` does not hand out the memory where c is stored, it copies the value to the caller (in a register or on the stack). So c can be placed on the stack.

But in this example:

```go
type User struct {
	Name string
}

func newUser() *User {
	u := User{Name: "Tom"}
	return &u // a pointer to u leaves the function
}
```

The escape analyzer sees that a pointer to u is returned, so u must stay alive after the function ends, and it places u on the heap (`go build -gcflags=-m` reports this as `moved to heap: u`).

In summary, in Go, the compiler decides where variables will be stored by analyzing the code.

How does Rust decide where to allocate data?

In Rust, the compiler does not perform escape analysis to decide where data is stored; the developer decides. To place a value on the heap, you explicitly use a type that allocates memory there, such as `Box`:

```rust
let x = Box::new(5); // the integer 5 lives on the heap; x itself (on the stack) holds a pointer to it
```

`Box<T>` guarantees that the value of type `T` is stored on the heap, and that heap memory is freed when the `Box` goes out of scope. Once the developer has made these allocation decisions, the compiler verifies that they are correct through ownership analysis and lifetime checking (together performed by the borrow checker).

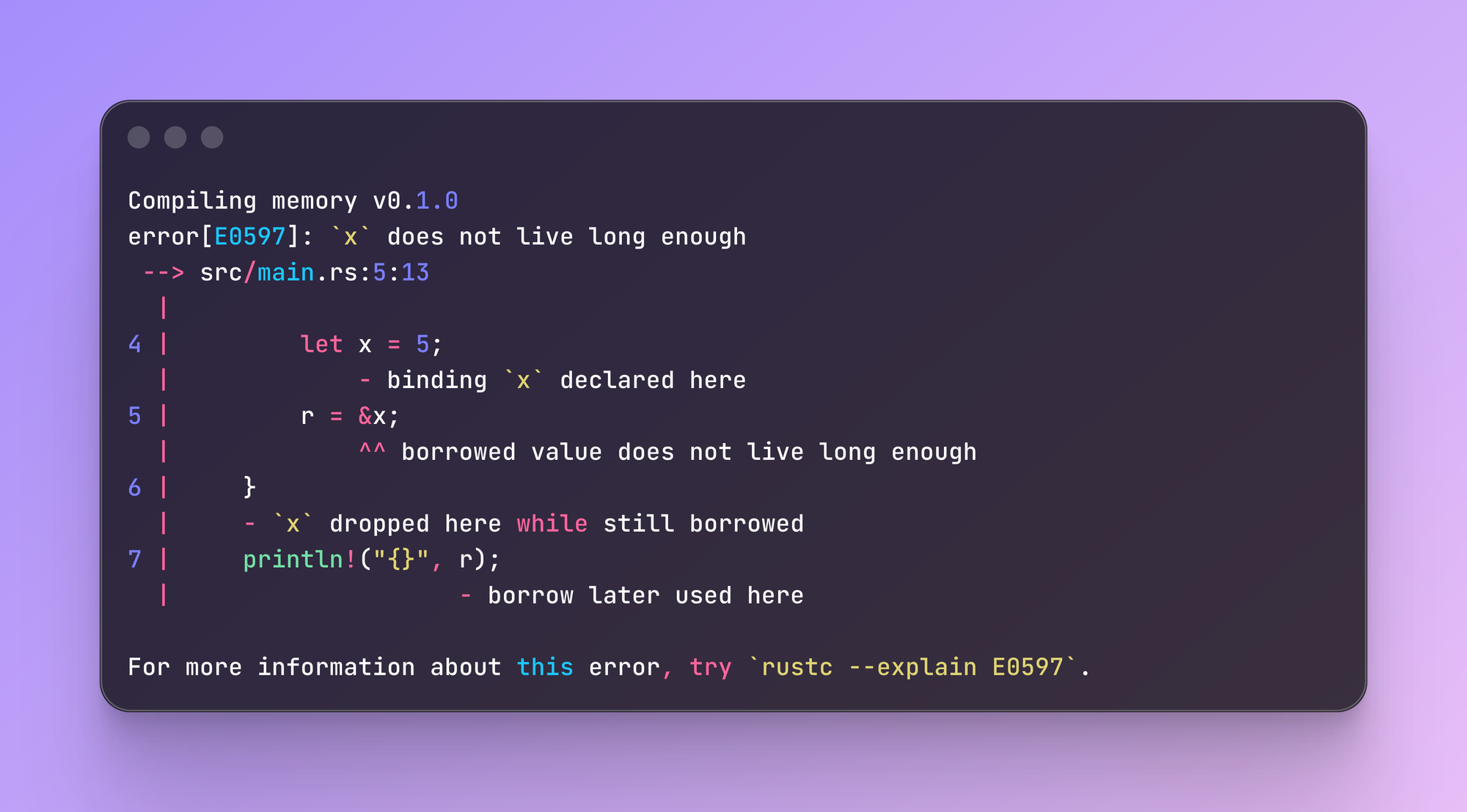
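For comparison with the Go `newUser` example, here is a minimal Rust sketch (the `User` struct and the function names are hypothetical). Rust does not let a function return a reference to one of its locals; instead you either return the value itself, moving it to the caller, or explicitly allocate it with `Box`. The `size_of` lines show that a `Box` keeps only a pointer in the local variable, while an inline array occupies its full size there.

```rust
use std::mem::size_of;

// Hypothetical type mirroring the Go `User` example.
#[derive(Debug, PartialEq)]
struct User {
    name: String,
}

// Return by value: the User is moved to the caller; the struct itself needs
// no separate heap allocation (the String's text is on the heap either way).
fn new_user() -> User {
    User { name: String::from("Tom") }
}

// Explicit heap allocation: the User lives on the heap, the caller gets a pointer.
fn new_user_boxed() -> Box<User> {
    Box::new(User { name: String::from("Tom") })
}

// A literal translation of the Go version is rejected by the compiler,
// because the returned reference would outlive the local `u`:
// fn new_user_ref() -> &User {
//     let u = User { name: String::from("Tom") };
//     &u
// }

fn main() {
    let a = new_user();
    let b = new_user_boxed();
    println!("{} {}", a.name, b.name);

    // An inline array stores all 1024 bytes in the variable itself,
    // while a Box stores only a pointer to the heap copy.
    println!("inline array: {} bytes", size_of::<[u8; 1024]>());
    println!("boxed array:  {} bytes", size_of::<Box<[u8; 1024]>>());
}
```

Returning by value is usually the idiomatic choice; `Box` is for cases that genuinely need a heap allocation, such as recursive types, trait objects, or large values.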

Lifetime analysis

During this phase, the compiler checks that no reference outlives the value it points to, so nothing can refer to memory that has already been freed.

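```rust
fn main() {
    let r;
    {
        let x = 5;
        r = &x; // error[E0597]: `x` does not live long enough
    } // x goes out of scope here, but r still borrows it
    println!("{}", r);
}
```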

Here x has a shorter scope than r, so the compiler rejects the program: after the inner block ends, r would point to a value that no longer exists.

But in Go, the same example will compile without any errors:

```go
package main

import "fmt"

func main() {
	var r *int
	{
		x := 5
		r = &x
	}
	fmt.Println(*r) // prints 5
}
```

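It compiles because Go does not tie a variable’s lifetime to the block it is declared in: a variable lives as long as it is reachable. Escape analysis works per function, and since r never leaves `main`, the compiler can keep `x` in `main`’s stack frame; only if the pointer outlived the function (as in `newUser`) would `x` be moved to the heap.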

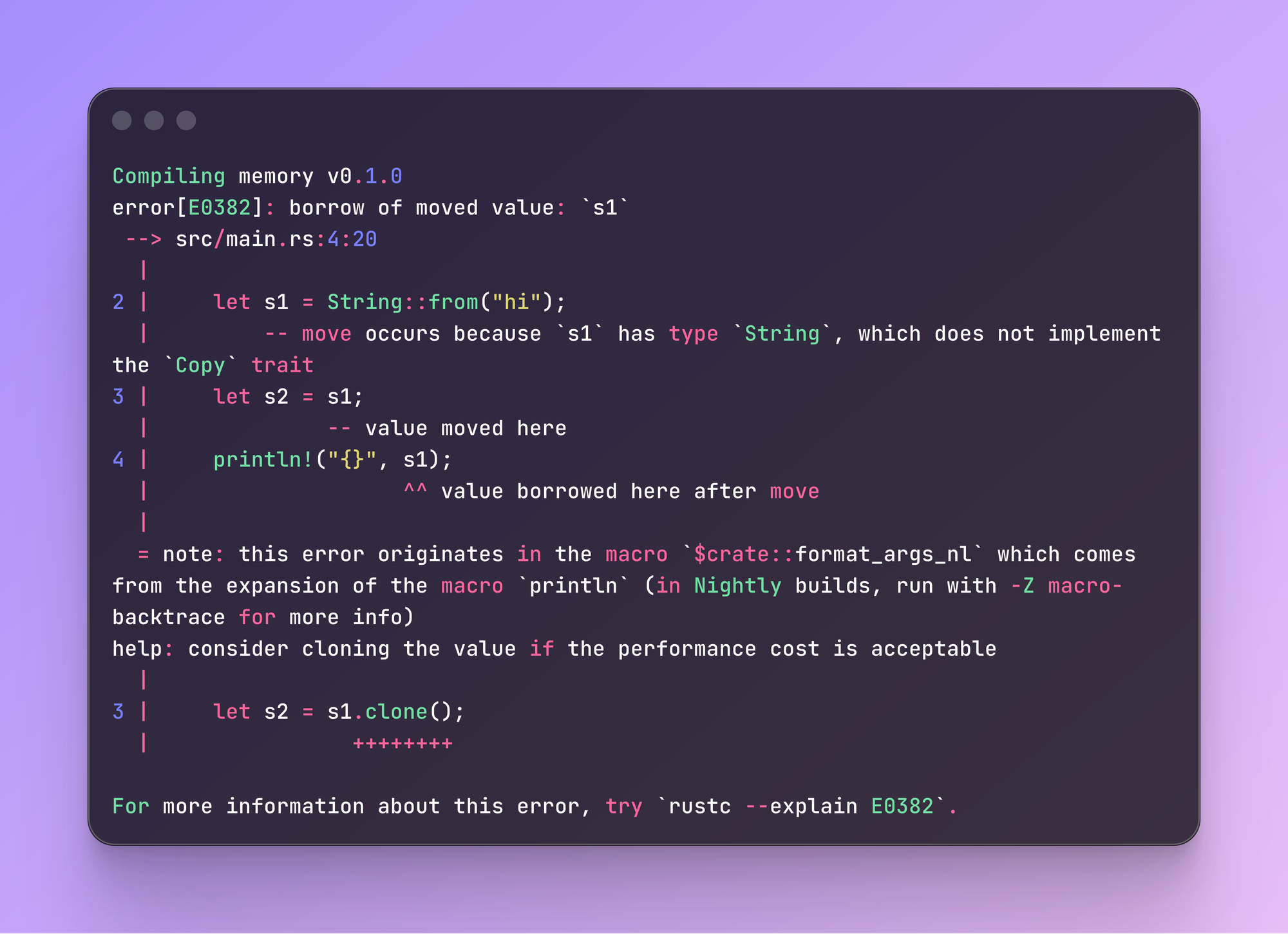
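One way to make the Rust version above compile is to declare x in an outer scope, so the value lives at least as long as every use of the reference. A minimal sketch (the function name is hypothetical):

```rust
// x is declared in the function's outer scope, so it is still alive
// everywhere r is used; the borrow checker accepts this.
fn read_through_reference() -> i32 {
    let x = 5;
    let r;
    {
        r = &x; // borrowing inside the inner block is fine...
    }
    *r // ...because x itself is still in scope here
}

fn main() {
    println!("{}", read_through_reference());
}
```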

Ownership analysis

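Next comes ownership analysis (in rustc it runs as part of the same borrow-checking pass). In Rust, every value has an owner, the variable that holds it. When the owner goes out of scope, drop() is called and the memory is freed. Ownership rules out double frees and use-after-free bugs, and it releases memory as soon as nothing owns it, although leaks are still possible in safe Rust (for example, with `Rc` reference cycles or `std::mem::forget`).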

```rust
fn main() {
    let s = String::from("hello");
    println!("{}", s);
} // s goes out of scope: drop() is called and the string's heap buffer is freed
```

The ownership rules are as follows:

- Each value has exactly one owner at a time

- When ownership is transferred (moved), the previous owner can no longer be used

- The value is dropped when its owner goes out of scope
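The rules above can be observed directly by implementing the `Drop` trait, whose `drop()` method Rust calls automatically when the owner goes out of scope. This is a minimal sketch; the `Resource` type and the `scope_demo` function are hypothetical and exist only to count drops:

```rust
use std::cell::Cell;

// Hypothetical resource type that records when it is dropped.
struct Resource<'a> {
    name: &'static str,
    drops: &'a Cell<u32>,
}

impl Drop for Resource<'_> {
    // Called automatically when the owner goes out of scope.
    fn drop(&mut self) {
        self.drops.set(self.drops.get() + 1);
        println!("drop({})", self.name);
    }
}

// Returns how many Resource values have been dropped once all scopes end.
fn scope_demo() -> u32 {
    let drops = Cell::new(0);
    {
        let a = Resource { name: "a", drops: &drops };
        {
            let b = Resource { name: "b", drops: &drops };
            println!("using {}", b.name);
        } // b's owner goes out of scope, so drop(b) runs here
        assert_eq!(drops.get(), 1);

        let c = a; // ownership moves to c; a can no longer be used
        assert_eq!(drops.get(), 1); // a move does not drop anything
        println!("c owns {}", c.name);
    } // c goes out of scope: the value created as "a" is dropped exactly once
    drops.get()
}

fn main() {
    println!("total drops: {}", scope_demo());
}
```

Two values were created and each is dropped exactly once, even though the first one changed owners along the way.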

Let’s look at an example:

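```rust
fn main() {
    let s1 = String::from("hi");
    let s2 = s1; // ownership of the string moves from s1 to s2
    println!("{}", s1); // error[E0382]: borrow of moved value: `s1`
}
```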

Here we transfer ownership and then try to print the data through the previous owner, so compilation fails with an error:

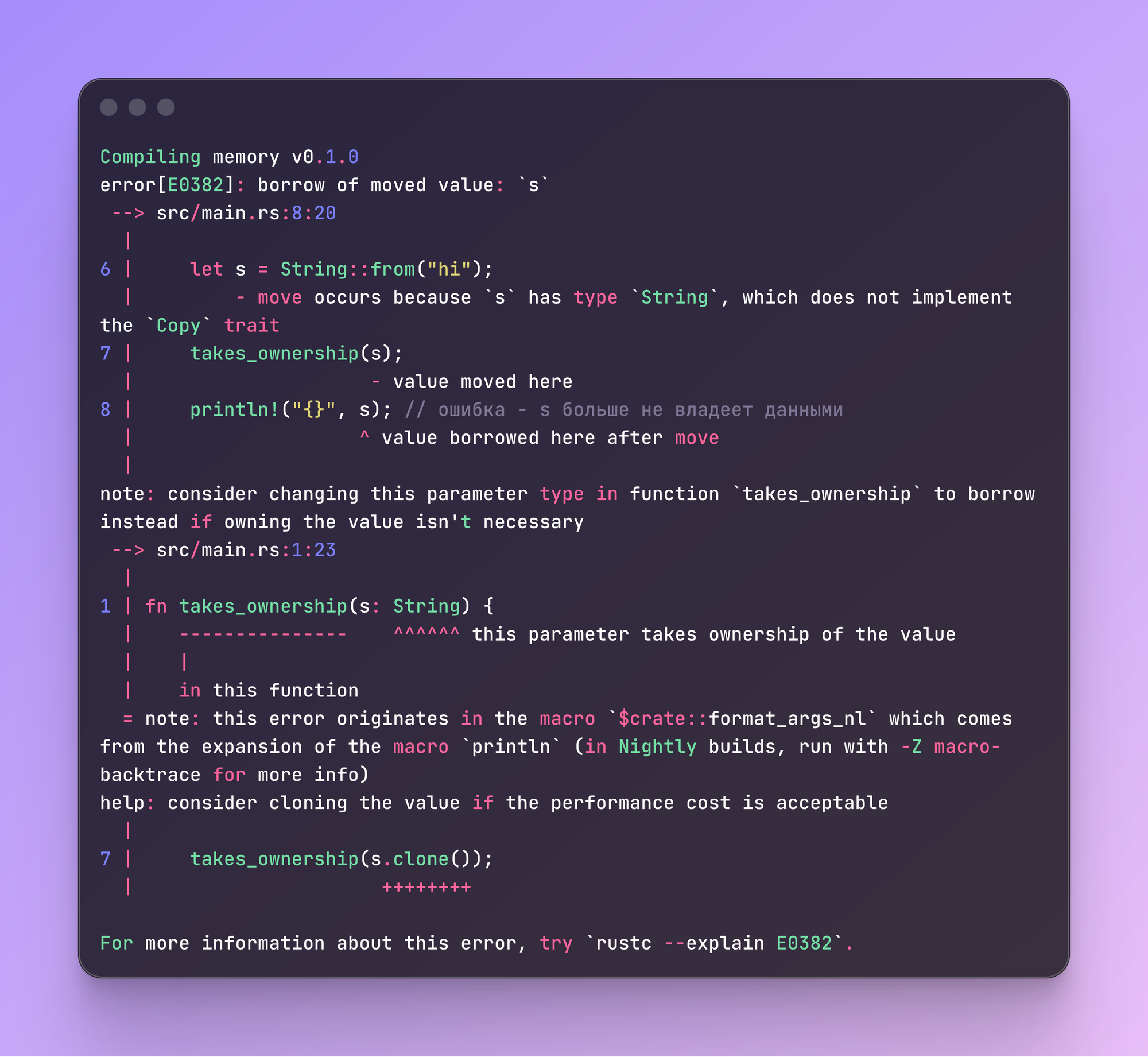
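If both variables really need to own the string, the usual fix is an explicit deep copy with `clone()`; if the second variable only needs to read it, it can borrow instead. A minimal sketch (the function names are hypothetical):

```rust
// Explicit deep copy: s1 and s2 own separate heap buffers.
fn clone_example() -> (String, String) {
    let s1 = String::from("hi");
    let s2 = s1.clone();
    (s1, s2) // both are still valid, so both can be returned
}

// Borrowing: s2 is only a reference; s1 remains the owner.
fn borrow_example() -> usize {
    let s1 = String::from("hi");
    let s2 = &s1;
    println!("{} {}", s1, s2);
    s2.len()
}

fn main() {
    let (a, b) = clone_example();
    println!("{} {}", a, b);
    println!("{}", borrow_example());
}
```

Making the copy explicit keeps its cost visible in the code.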

The same happens when passing a value to a function:

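```rust
fn takes_ownership(s: String) {
    println!("{}", s);
} // s goes out of scope here and the String is dropped

fn main() {
    let s = String::from("hi");
    takes_ownership(s); // ownership moves into the function
    println!("{}", s); // error[E0382]: borrow of moved value: `s`
}
```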

The compiler again rejects the last line with error E0382 (borrow of moved value: `s`).

If you come from Go, this example might seem confusing. Why doesn’t s still own the data, when takes_ownership() only prints it?

Because when the value is passed to the function, ownership moves to the parameter s. When the function returns, s goes out of scope and the String is dropped, so main can no longer use it. This guarantees that the same memory is never freed twice.

To make the example work, pass a reference instead, so the function only borrows the value:

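```rust
fn takes_ownership(s: &String) { // despite its name, this version only borrows s
    println!("{}", s);
}

fn main() {
    let s = String::from("hi");
    takes_ownership(&s); // lend s to the function; main keeps ownership
    println!("{}", s);
}
```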
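Borrowing is the idiomatic fix. For comparison, a by-value signature can also work if the function hands ownership back by returning the `String`. A minimal sketch with a hypothetical `print_and_return` function:

```rust
// Takes ownership of s, uses it, then returns it so the caller owns it again.
fn print_and_return(s: String) -> String {
    println!("{}", s);
    s
}

fn main() {
    let s = String::from("hi");
    let s = print_and_return(s); // ownership goes into the function and comes back
    println!("{}", s);
}
```

No copy of the text is made: only the `String`’s pointer, length, and capacity move in and out. The pattern is clumsy in practice, which is why borrowing is preferred.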

This is how Rust ensures memory safety at compile time.

Why doesn’t Rust just move an object from the stack to the heap automatically, like Go does?

We’ve already discussed how memory cleanup works in Rust - through developer control and compiler errors. But how does it work in Go?

That’s where the garbage collector (GC) comes in. The garbage collector is part of the language runtime and runs concurrently with your program. It finds heap objects that are no longer reachable (no live references point to them) and reclaims their memory.

Thanks to this, we don’t have to worry about where an object is stored. However, we must remember that the GC runs alongside our program and consumes CPU time. Rust has no such background work: the compiler determines at compile time where each value is freed, so the only runtime cost of memory management is the allocation and deallocation calls themselves.

Let’s look at an example to see when and how much CPU each language uses.

Below is a Go program that performs 1,000,000 allocations of 1 KB each and keeps every 1000th buffer in a package-level slice:

```go
package main

import (
	"fmt"
	"net/http"
	_ "net/http/pprof"
	"time"
)

var keep [][]byte

func main() {
	go func() {
		fmt.Println("pprof on http://localhost:6060/debug/pprof/")
		_ = http.ListenAndServe("localhost:6060", nil)
	}()

	go func() {
		for i := 0; i < 1_000_000; i++ {
			buf := make([]byte, 1024)
			if i%1000 == 0 {
				keep = append(keep, buf)
			}
			if i%100_000 == 0 {
				fmt.Println("iter", i)
				time.Sleep(1000 * time.Millisecond)
			}
		}
		fmt.Println("done, kept:", len(keep))
	}()

	select {}
}
```

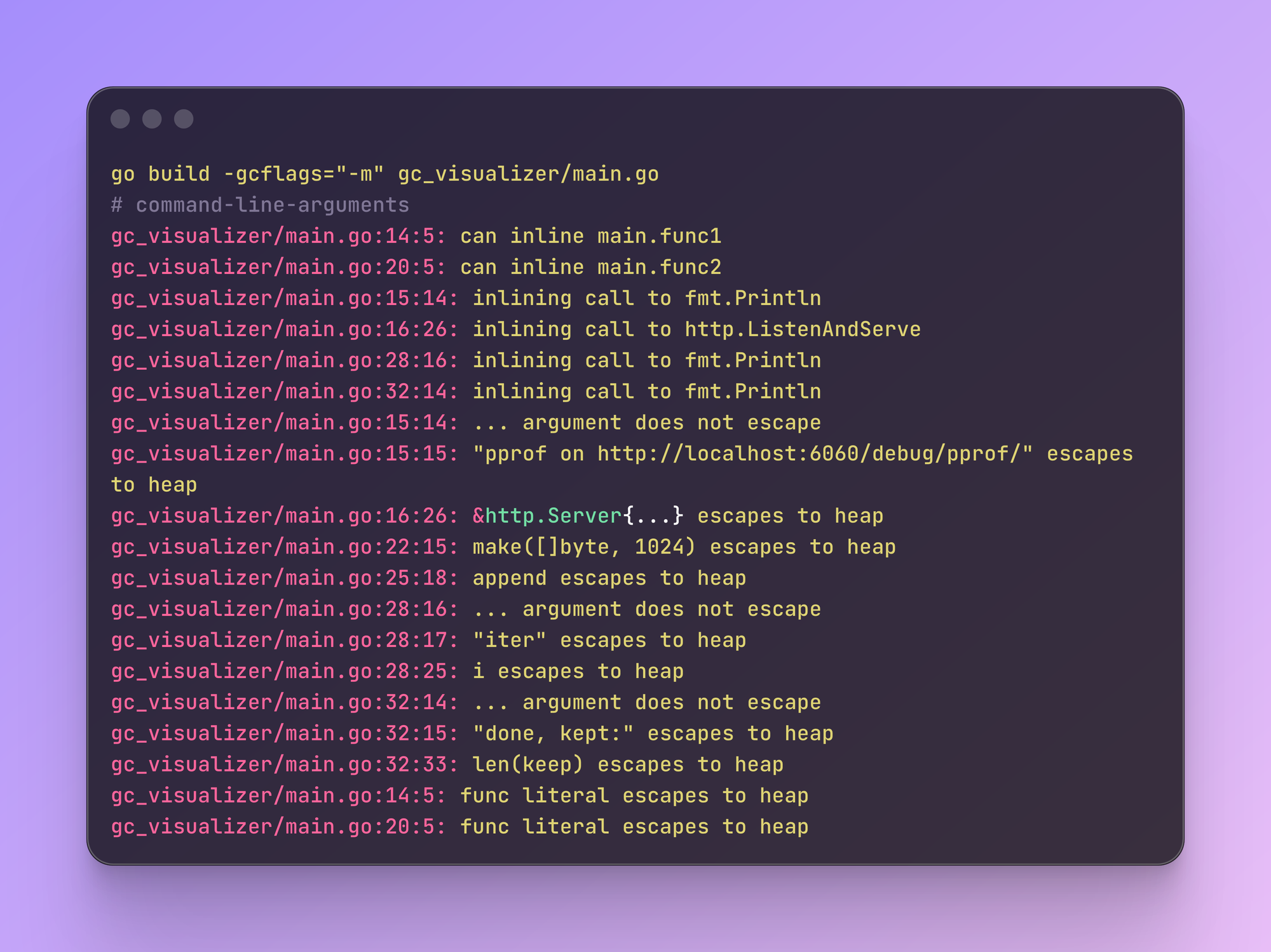

Go lets us see the decisions made by the escape analyzer. To do that, we pass the -m flag to the compiler with `go build -gcflags=-m`:

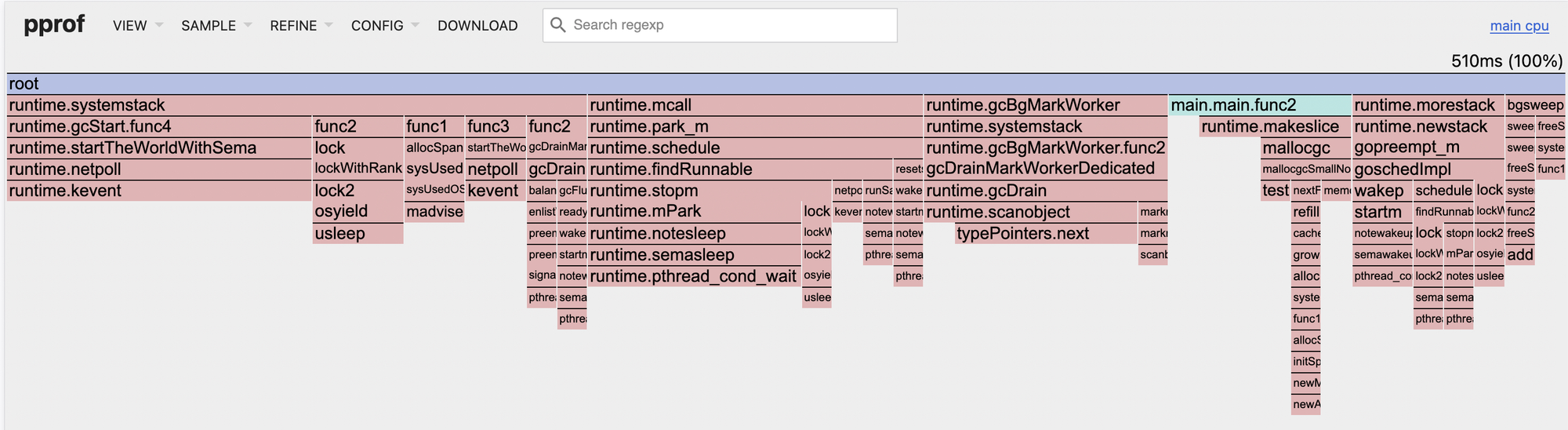

Now let’s see how much total CPU time the GC consumed. In one terminal, we run the program with GODEBUG=gctrace=1:

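```sh
GODEBUG=gctrace=1 go run gc_visualizer/main.go
```

In another terminal, we start pprof and collect a 20-second CPU profile from the running program:

```sh
go tool pprof -seconds 20 -http=:8080 http://localhost:6060/debug/pprof/profile
```

We can see that the CPU samples add up to 510 ms: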

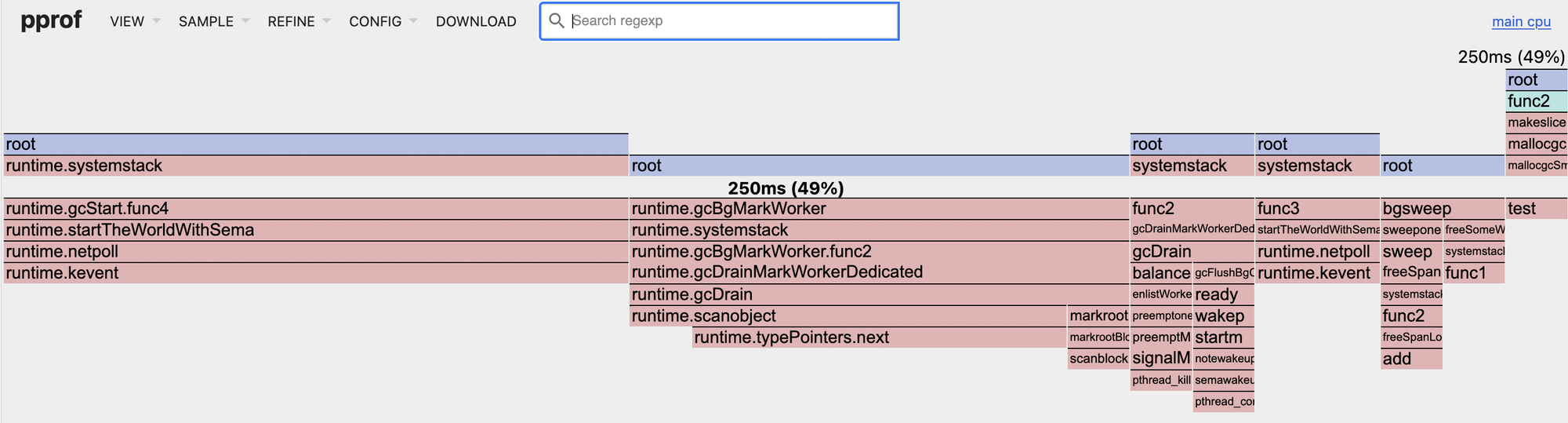

Here is the same profile, but filtered to show only the methods used by the GC:

Now let’s look at the Rust code that performs similar logic:

```rust
use std::{thread, time::Duration};

use pprof::ProfilerGuardBuilder;
use pprof::protos::Message; // for write_to_writer()

fn main() {
    // Sample the CPU 100 times per second.
    let guard = ProfilerGuardBuilder::default()
        .frequency(100)
        .build()
        .unwrap();

    let mut keep: Vec<Vec<u8>> = Vec::new();
    for i in 0..1_000_000u32 {
        let buf = vec![0u8; 1024];
        if i % 1000 == 0 {
            keep.push(buf);
        }
        if i % 100_000 == 0 {
            println!("iter {i}");
            thread::sleep(Duration::from_millis(1000));
        }
        // If buf was not moved into keep, it is freed here, at the end of the iteration.
    }
    println!("done, kept: {}", keep.len());

    // Write the collected samples in pprof's protobuf format.
    if let Ok(report) = guard.report().build() {
        let mut f = std::fs::File::create("cpu.pb").unwrap();
        report.pprof().unwrap().write_to_writer(&mut f).unwrap();
    }
}
```

Cargo.toml:

```toml
[profile.release]
debug = true

[dependencies]
pprof = { version = "0.15", features = ["flamegraph", "protobuf-codec"] }
```

We run the program with:

```sh
RUSTFLAGS="-C force-frame-pointers=yes" cargo run --release
```

Then open it in pprof:

```sh
go tool pprof -http=:0 ./cpu.pb
```

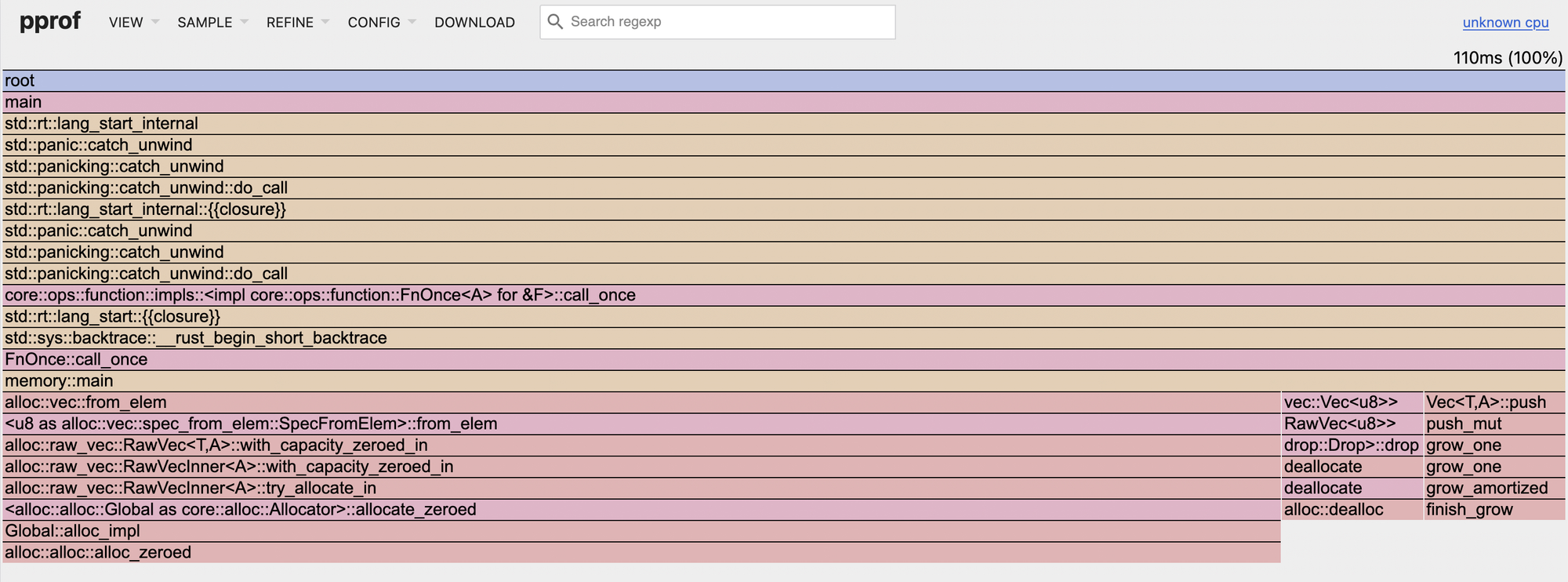

And we can see that the Rust profile shows 110 ms of CPU time:

There are no runtime.gc* calls, unlike in Go. Objects are freed immediately when they go out of scope.

In Rust, there is no background garbage collector, so we won’t see mark/sweep cycles or calls like gcBgMarkWorker. All memory management consists of explicit allocations, deallocations, and drop() calls.

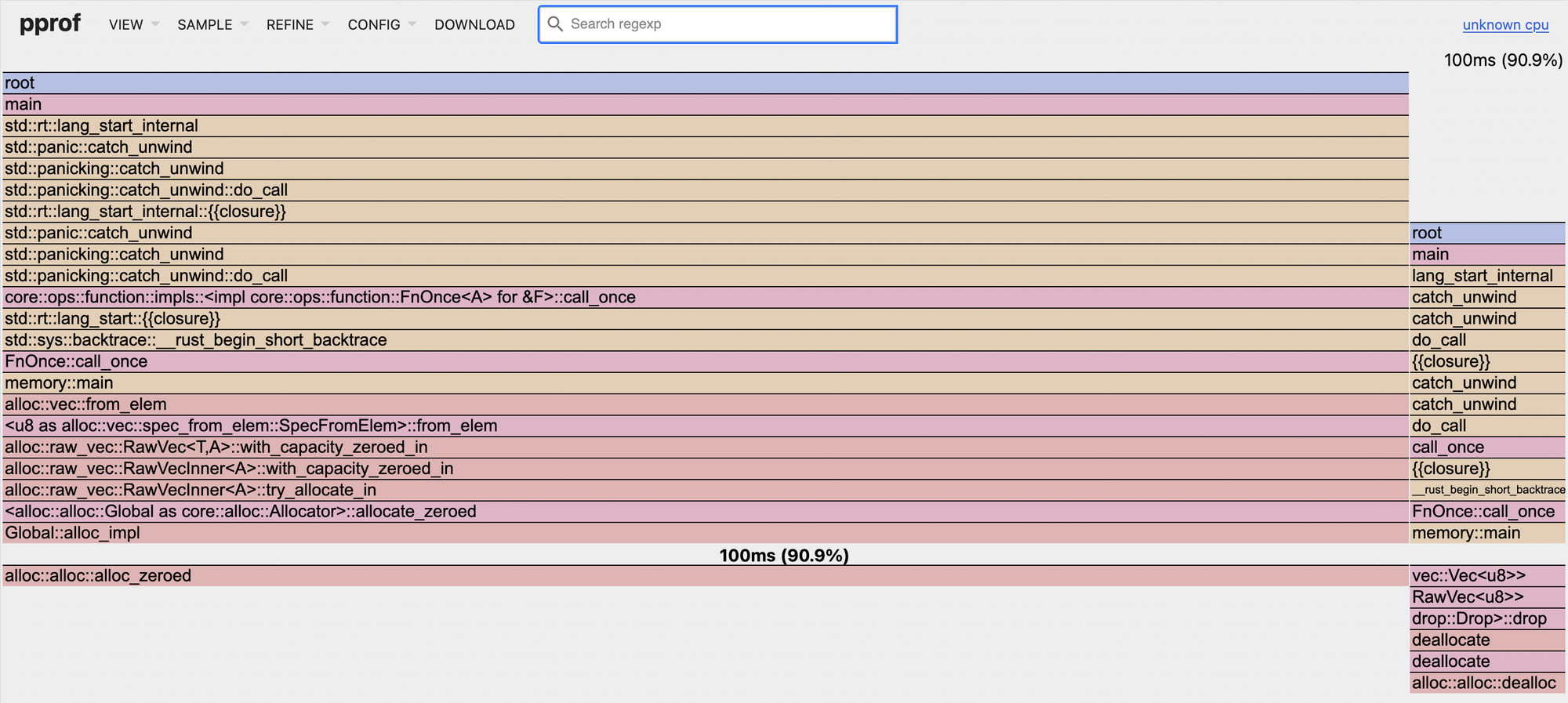

Now let’s look at the same samples, but filtered to show only the methods that work with memory:

Allocations took about 90% of the total CPU time. Even so, the total CPU time is almost five times lower than that of the equivalent Go program (110 ms vs 510 ms); wall-clock time is similar for both, since it is dominated by the sleeps.

It’s important to understand that directly comparing such code is not entirely accurate. CPU time can depend on many factors, and the code itself behaves differently in each language. The main takeaway is that you should understand how the GC works in Go and remember that when there are many heap allocations, the GC’s runtime and CPU load will be significantly higher.
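The claim that Rust frees each buffer as soon as its owner goes out of scope can also be checked without a profiler. The sketch below is an illustration under stated assumptions, not the setup used for the measurements above: it installs a hypothetical counting global allocator (`CountingAlloc`) that forwards to `std::alloc::System`, then runs the same allocate-and-keep loop as the Rust benchmark, without the sleeps and pprof. The compiler is allowed to optimize heap allocations away, so `std::hint::black_box` is used as a best-effort way to keep them; the counters are global, so other threads could only make them larger.

```rust
use std::alloc::{GlobalAlloc, Layout, System};
use std::hint::black_box;
use std::sync::atomic::{AtomicUsize, Ordering};

// Hypothetical instrumentation: forwards to the system allocator and counts calls.
struct CountingAlloc;

static ALLOCS: AtomicUsize = AtomicUsize::new(0);
static FREES: AtomicUsize = AtomicUsize::new(0);

unsafe impl GlobalAlloc for CountingAlloc {
    unsafe fn alloc(&self, layout: Layout) -> *mut u8 {
        ALLOCS.fetch_add(1, Ordering::Relaxed);
        unsafe { System.alloc(layout) }
    }

    unsafe fn dealloc(&self, ptr: *mut u8, layout: Layout) {
        FREES.fetch_add(1, Ordering::Relaxed);
        unsafe { System.dealloc(ptr, layout) }
    }
}

#[global_allocator]
static GLOBAL: CountingAlloc = CountingAlloc;

// Same shape as the benchmark loop. Returns (allocations, frees) observed
// while the loop runs, plus the number of kept buffers.
fn run(iterations: u32, keep_every: u32) -> (usize, usize, usize) {
    let allocs_before = ALLOCS.load(Ordering::Relaxed);
    let frees_before = FREES.load(Ordering::Relaxed);

    let mut keep: Vec<Vec<u8>> = Vec::new();
    for i in 0..iterations {
        // black_box discourages the optimizer from removing the allocation.
        let buf = black_box(vec![0u8; 1024]);
        if i % keep_every == 0 {
            keep.push(buf);
        }
        // Otherwise buf's owner goes out of scope here and it is freed immediately.
    }

    let allocs = ALLOCS.load(Ordering::Relaxed) - allocs_before;
    let frees = FREES.load(Ordering::Relaxed) - frees_before;
    (allocs, frees, keep.len())
}

fn main() {
    let (allocs, frees, kept) = run(100_000, 1000);
    println!("allocations: {allocs}, frees during the loop: {frees}, kept: {kept}");
}
```

Every buffer that is not pushed into `keep` is counted as freed before the loop finishes; there is no separate collection phase later.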

Conclusion

Go saves your time now, Rust saves CPU time later. But remember: the best language is the one that saves the most valuable resource in your specific project - and for each project, that resource will be different.
